TLDR: Major teams are building confidential AI infrastructure to store and process sensitive workloads. TEEs, confidential compute, and verifiable logs can produce millions of rows of attestations and data per user.

The real challenge is this: how do you resolve disputes, run audits, and answer questions in the middle of a crisis quickly and clearly enough to retain user trust?

---

There has been a recent acceleration toward confidential AI infrastructure for enterprises, governments, regulated industries, and, soon, end-user data.

Teams and projects like Google’s Project Oak, Apple’s Private Cloud Compute, OpenAI, Phala, Brave, and Telegram’s Cocoon have all converged on a similar view: device-side compute is not enough, and server-side confidential compute will be necessary.

This trend is interesting to me because I have spent time with privacy chains and wallet infrastructure providers that need to prove the integrity of their data to someone else. Think of real-world audits and queries in the middle of a crisis.

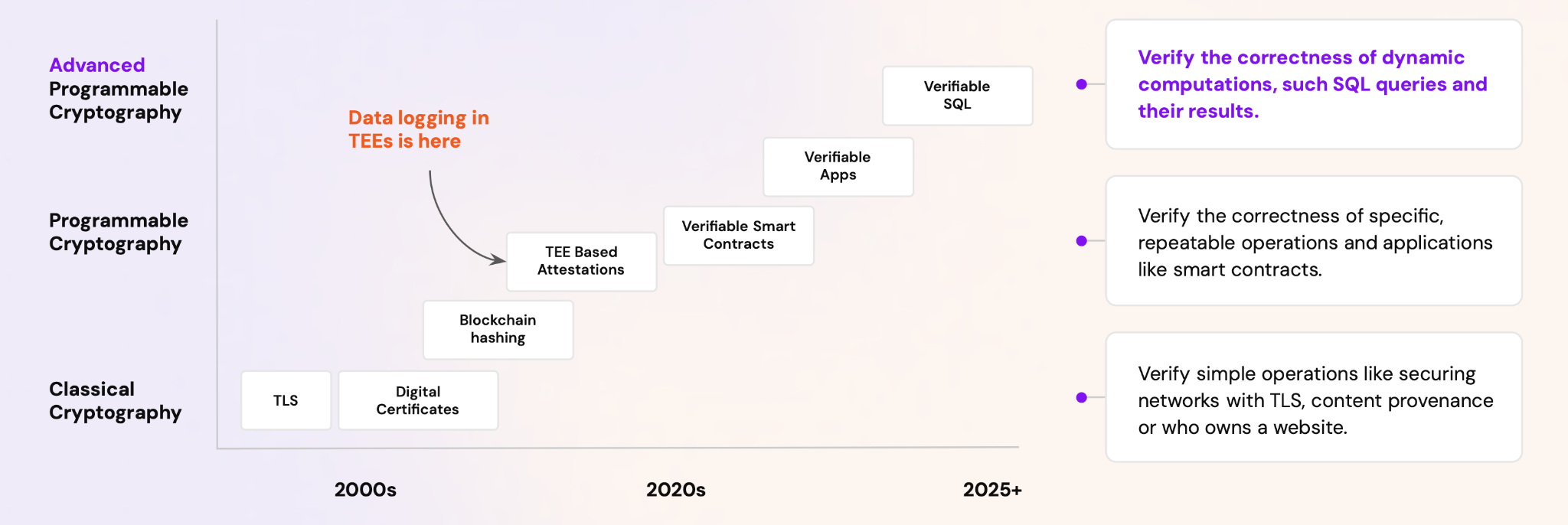

A takeaway from these conversations is that confidential computing stacks, blockchains, and verifiable logs all play a similar role: they capture, with integrity, the state of the infrastructure and of the models or applications running on it. Verifiable logs also capture events in data pipelines, data access, and policy changes.

The hard part starts after you have built this beautiful confidential stack. As an engineer you might be proud of the system, but how do you prove this to millions of users and to auditors in a way they can actually use?

Most end users, even technical ones, are not going to audit your code or read your enclave configuration. They will rely on the broader community to validate that. What they care about is what happened to their data.

High level attestations about which version of an application was running at a given time are not too hard. You can show that in a dashboard, and a third party can verify those attestations.

So the unsolved part is verifying what is happening with the data: consents changing, queries being answered, data flowing between external systems, and models being trained or fine-tuned on user data. This is where user experience matters most and where things usually break.

Take a user called Bob. He logs into a service that runs on a confidential computing environment and notices that his consent has changed.

Questions immediately come up in his mind:

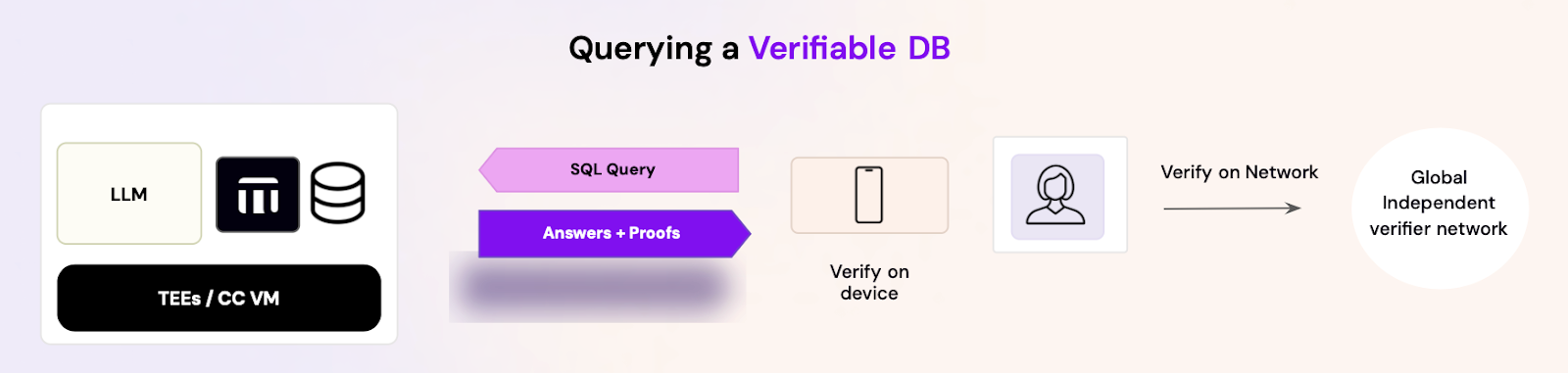

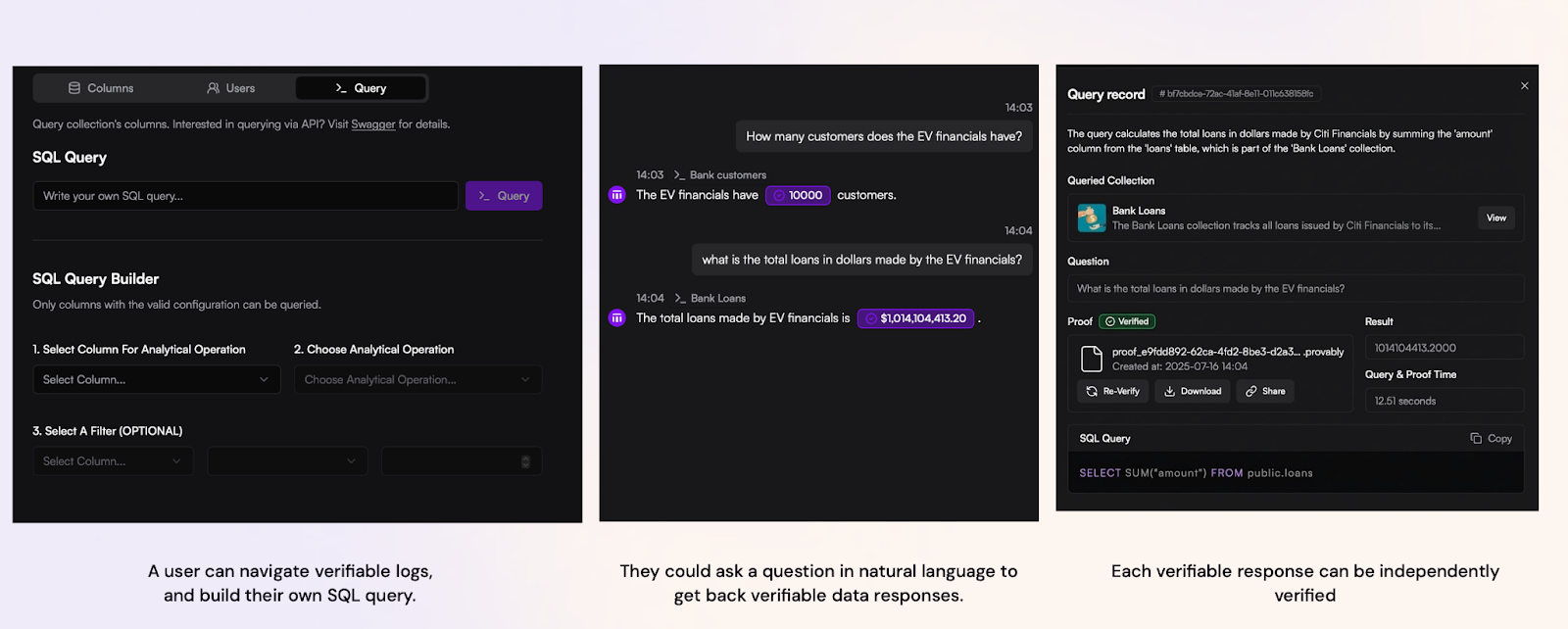

Bob needs a user interface where he can ask these dynamic, spontaneous questions. He also needs a way to verify the answers he gets from the remote confidential system.

Right now there is no standard user interface for this in Web2.

On blockchains, users know to use explorers to look up transactions and balances. But those explorers are run by trusted operators and are suited to atomic data lookups. There is not yet a fully verifiable explorer that supports fast, verifiable data queries.

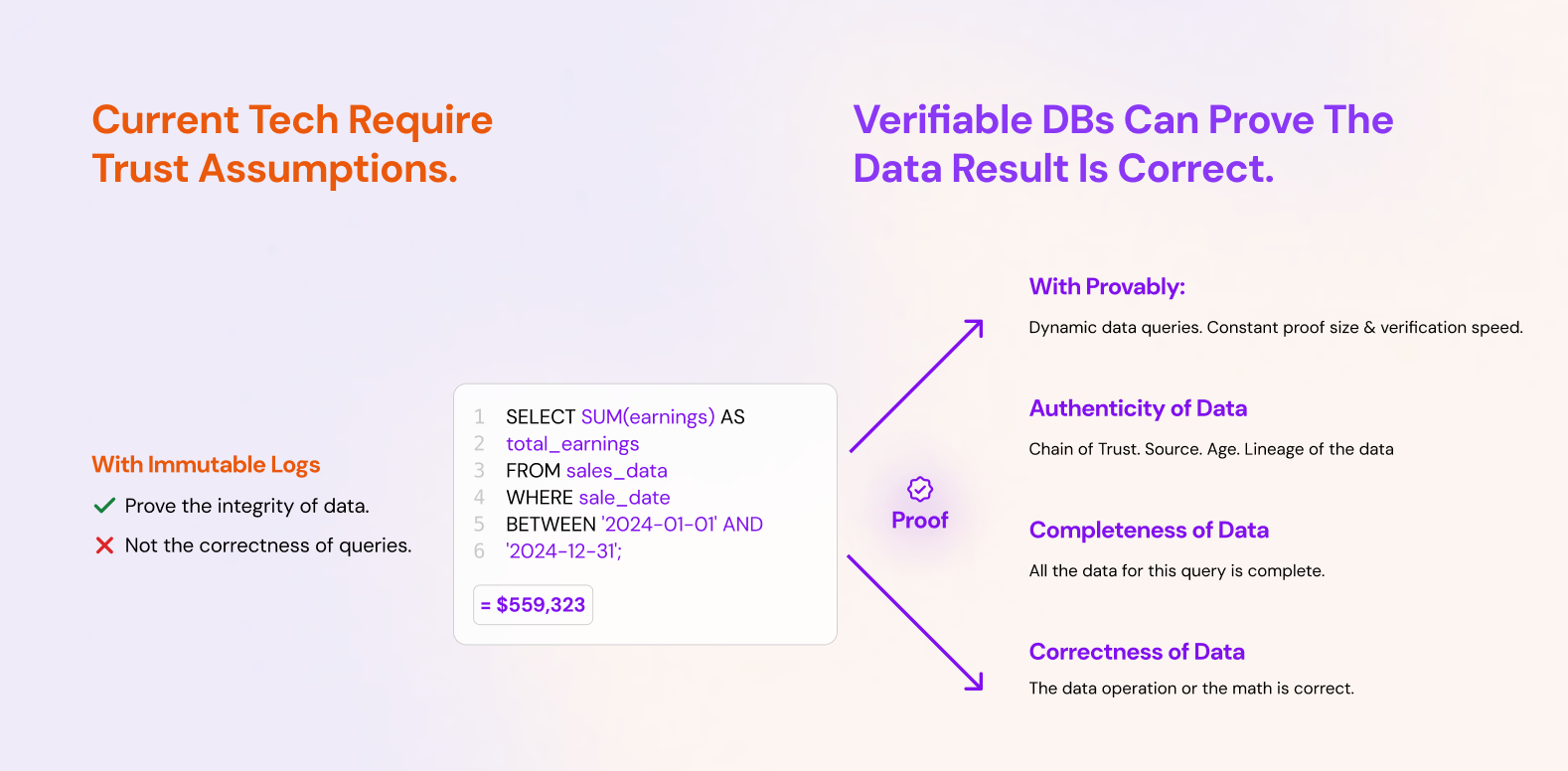

In non-blockchain scenarios, a provider might keep an append-only or immutable log, but its integrity is really hard to prove to someone else. In most cases, providers send raw log files or expose logs via APIs. This is not good enough because the end user, technical or not, cannot verify the integrity of this data.
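To make the problem concrete, here is a minimal sketch of a hash-chained append-only log. The event fields, the `GENESIS` value, and the helper names are illustrative assumptions, not any provider's actual format. It shows that a provider who rewrites history and recomputes every hash still hands over a log that looks internally consistent. The rewrite only becomes visible if the user holds a copy of the head hash from somewhere the provider cannot change.

```python
import hashlib
import json

GENESIS = "0" * 64  # assumed starting value for the chain


def entry_hash(prev_hash: str, event: dict) -> str:
    """Hash an event together with the hash of the previous entry."""
    payload = json.dumps(event, sort_keys=True).encode()
    return hashlib.sha256(prev_hash.encode() + payload).hexdigest()


def build_chain(events):
    """Build a log where each entry commits to everything before it."""
    chain, prev = [], GENESIS
    for event in events:
        prev = entry_hash(prev, event)
        chain.append({"event": event, "hash": prev})
    return chain


def chain_is_consistent(chain) -> bool:
    """Recompute every link; this is all a user can do with a raw log file."""
    prev = GENESIS
    for entry in chain:
        if entry_hash(prev, entry["event"]) != entry["hash"]:
            return False
        prev = entry["hash"]
    return True


# Hypothetical events for illustration only.
events = [
    {"user": "bob", "action": "consent_granted"},
    {"user": "bob", "action": "consent_revoked"},
    {"user": "bob", "action": "data_exported"},
]
original = build_chain(events)

# Assumption: the head hash was published somewhere the provider cannot rewrite.
anchored_head = original[-1]["hash"]

# The provider rewrites the second event and recomputes the whole chain.
rewritten = build_chain(
    [events[0], {"user": "bob", "action": "consent_granted"}, events[2]]
)

internally_ok = chain_is_consistent(rewritten)          # the raw log still checks out
matches_anchor = rewritten[-1]["hash"] == anchored_head  # the anchor exposes the rewrite
print(internally_ok, matches_anchor)
```

The design point is that the chain alone proves nothing to a remote party. The externally held head hash is what turns an append-only log into something a user can check.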

So, more and more teams are either using a TEE to attest their data, or a blockchain to timestamp and anchor the logs. Let us call these “verifiable data logs” because they are cryptographically verifiable by a remote user. That leads to the final set of questions:

If you have verifiable data, how does a remote user succinctly query that data?

And how can you prove to the end user you did not deliberately withhold certain rows of verifiable data in response to a question?

Even when using an authenticated data structure like a Merkle tree, a remote user has no way to detect this unless they download the entire dataset, check that their copy of the authenticated data structure is consistent with the remote system, and then independently run all the queries locally.
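The withholding problem can be sketched in a few lines. The example below is a simplified Merkle tree with made-up rows and helper names (`build_levels`, `prove`, `verify` are illustrative, not a specific library's API). The server answers "show me Bob's consent events" twice: once honestly, and once leaving out a row. Every returned row carries a valid inclusion proof in both cases, so the verification Bob can run passes either way.

```python
import hashlib


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def leaf_hash(row: str) -> bytes:
    return h(b"\x00" + row.encode())  # domain-separate leaves from inner nodes


def node_hash(left: bytes, right: bytes) -> bytes:
    return h(b"\x01" + left + right)


def build_levels(rows):
    """Return all tree levels, leaves first. Odd levels are padded by repeating the last node."""
    level = [leaf_hash(r) for r in rows]
    levels = [level]
    while len(level) > 1:
        if len(level) % 2 == 1:
            level = level + [level[-1]]
            levels[-1] = level  # keep the padded level so siblings can be looked up
        level = [node_hash(level[i], level[i + 1]) for i in range(0, len(level), 2)]
        levels.append(level)
    return levels


def prove(levels, index):
    """Inclusion proof: the sibling hash at each level, plus which side it sits on."""
    proof = []
    for level in levels[:-1]:
        sibling = index ^ 1
        proof.append((level[sibling], sibling < index))
        index //= 2
    return proof


def verify(row, proof, root) -> bool:
    acc = leaf_hash(row)
    for sibling, sibling_is_left in proof:
        acc = node_hash(sibling, acc) if sibling_is_left else node_hash(acc, sibling)
    return acc == root


# Hypothetical log rows for illustration only.
rows = [
    "bob|2024-01-05|consent=granted",
    "alice|2024-01-06|consent=granted",
    "bob|2024-02-10|consent=revoked",
    "carol|2024-02-11|consent=granted",
    "bob|2024-03-01|consent=granted",
]
levels = build_levels(rows)
root = levels[-1][0]  # assumption: Bob already trusts this root


def answer(indices):
    return [(rows[i], prove(levels, i)) for i in indices]


honest = answer([0, 2, 4])   # all of Bob's rows
withheld = answer([0, 2])    # the server silently drops the latest change

honest_ok = all(verify(r, p, root) for r, p in honest)
withheld_ok = all(verify(r, p, root) for r, p in withheld)
print(honest_ok, withheld_ok)
```

Inclusion proofs show that each returned row is in the committed dataset. They say nothing about whether the returned set is complete, which is the gap a proof over the query itself has to close.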

To solve these problems, I think the future is verifiable explorers and dashboards enabled by verifiable databases. Verifiable databases can prove the integrity of both their data and their query results, without the user performing complex and time-consuming verification tasks while under emotional and time pressure.

Verifiable databases, such as those built by Provably, are middleware that plugs into traditional databases like Postgres and returns verifiable SQL query responses. In this scenario, when a user runs a query like:

“When did my consent change?”

they get back the normal query result and a single small proof (1 KB) that they can verify independently.

That verification tells them:

The query can be issued through an explorer, a natural language interface where an LLM returns a verifiable proof along with the answer, or through an MCP server that connects to the confidential environment.

The future of audit and fast dispute resolution is simple: answer dynamic data questions with a single proof at speed on big data. This is the sharp edge where users can actually experience confidential computing, not just read about it in the branding and documentation.

We have already spoken to multiple wallet infrastructure teams and privacy centric blockchains, and this pattern seems to be emerging as a new standard for proving the integrity of data responses from confidential computing environments. We also believe AI models will increasingly prove the integrity of their answers.

Here are some materials on how Provably’s verifiable databases work:

If you want to use our verifiable database or explorer, contact us. If you want to support our research on faster proving on large datasets, verifiable indexing, verifiable data networks, or verifiable vector databases, reach out.