In the world of Zero-Knowledge (ZK), the "Circuit" has been the foundational abstraction for years. The industry standard has been clear: if you want to prove a statement is true, you translate your logic into a set of mathematical constraints.

But for developers building data-intensive applications, this creates a massive headache. Modern software is designed to be flexible: we write code that changes and branches as requirements grow. Databases follow a similar pattern: they allow teams to modify schemas, update data models, or change queries in seconds. This flexibility is a core assumption of how modern applications are built and maintained.

The "Circuit" model, by contrast, relies on rigidity. Using it feels less like writing software and more like designing hardware. You have to pre-calculate every "wire" and "gate" before you’ve even processed a single row of data.

Mapping a dynamic database onto a rigid circuit model makes development complex and inflexible, and development speed drops sharply.

The "Circuit" model operates by transforming logical statements into a static system of mathematical equations. In this paradigm, every potential path of a query must be defined upfront, creating a fixed structure where inputs and outputs are determined the moment the circuit is compiled.

Because the proof depends on the specific mathematical structure of the circuit, the system remains locked to the requirements defined at the moment of creation. The logic is effectively frozen into the architecture. When a data model evolves or a query requires a new filter, the static circuit can no longer accommodate the change.

So, how do we get the trust of a ZK-style system without the headache? The answer lies in Verifiable Databases (VDBs).

In the past, developers had to choose between two extremes. On one side were "lightweight" solutions, often called Authenticated Data Structures (ADS). These were fast and intuitive but could only prove simple things, like whether a specific row existed. They were stuck when it came to complex logic like calculating sums or joining tables. On the other side were "General Purpose SNARKs" that tried to turn every SQL query into a massive, complicated circuit. While powerful, they were heavy and complex to work with. Neither really worked for real-world development.

QEDB represents a shift in thinking. Instead of forcing your data to fit into a rigid cryptographic circuit, the cryptography is redesigned to fit the data. It emerges from the conviction that we can merge the best of both worlds: a return to those intuitive, lightweight ADS roots, but equipped with a modern algebraic engine.

Think of it as the difference between building a custom calculator for every single math problem (the Circuit approach) and having a powerful engine that already understands the language of data. QEDB abstracts your database tables into mathematical primitives: columns are treated as polynomials and sets. On top of this basic abstraction, QEDB provides a set of primitives that allow you to prove a subset of the SQL logic without changing a single line of code.

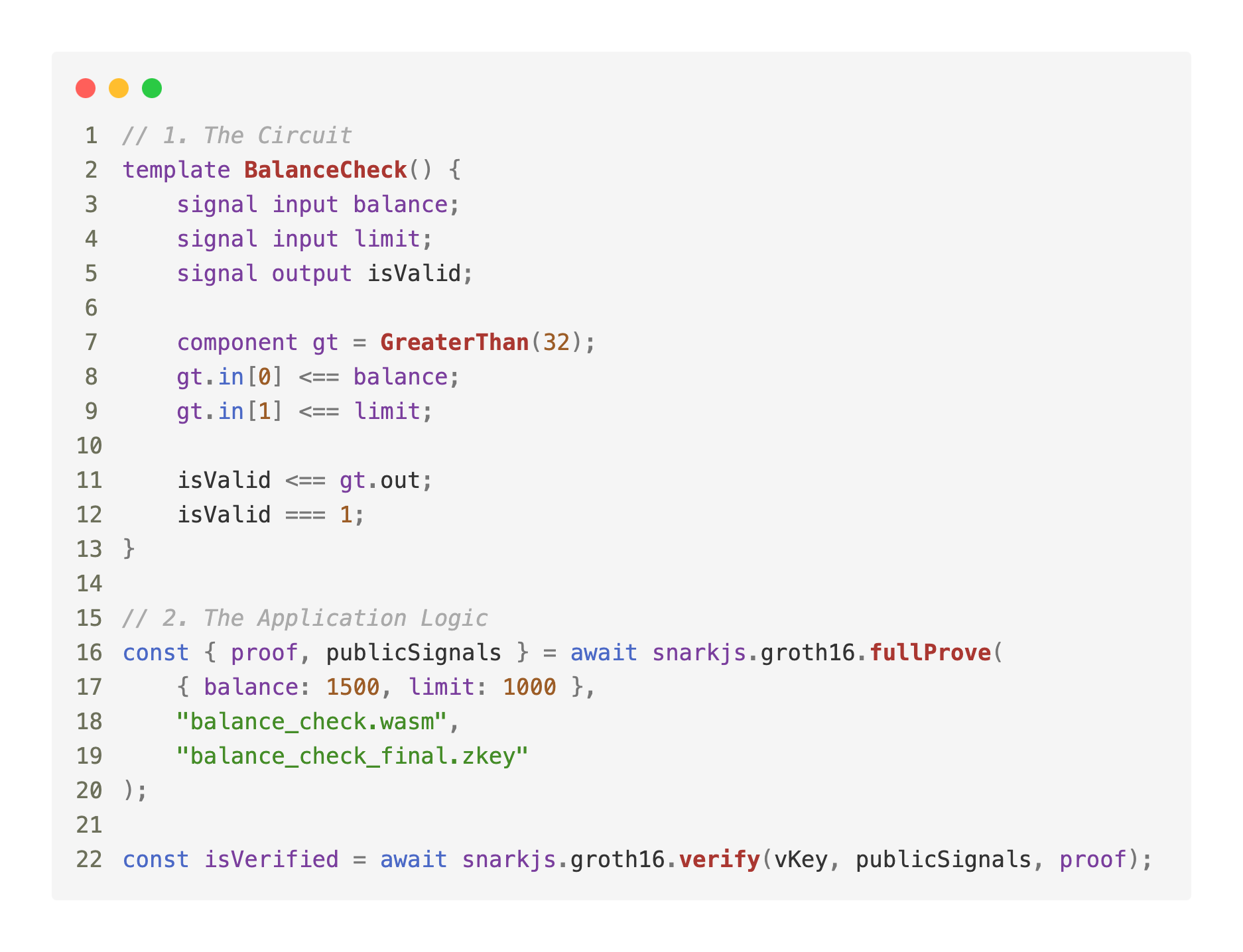
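To make "columns as polynomials and sets" more concrete, here is a minimal Python sketch of two standard encodings: interpolating a column so that the polynomial takes the i-th value at point i, and encoding a set as the polynomial whose roots are its elements. The field modulus `P`, the evaluation points `0..n-1`, and all function names are assumptions chosen for illustration; they are not QEDB's actual parameters or API.

```python
# Illustrative only: a toy prime field and textbook encodings,
# not QEDB's real parameters, domain, or API.
P = 2**61 - 1  # toy field modulus (a Mersenne prime), chosen for the example


def poly_mul(a, b):
    """Multiply two polynomials (coefficient lists, lowest degree first) mod P."""
    out = [0] * (len(a) + len(b) - 1)
    for i, x in enumerate(a):
        for j, y in enumerate(b):
            out[i + j] = (out[i + j] + x * y) % P
    return out


def poly_eval(coeffs, x):
    """Evaluate a polynomial at x using Horner's rule mod P."""
    acc = 0
    for c in reversed(coeffs):
        acc = (acc * x + c) % P
    return acc


def column_as_polynomial(values):
    """Lagrange-interpolate so that poly(i) == values[i] for i = 0..n-1."""
    n = len(values)
    result = [0] * n
    for i, v in enumerate(values):
        basis, denom = [1], 1
        for j in range(n):
            if j != i:
                basis = poly_mul(basis, [(-j) % P, 1])  # multiply by (X - j)
                denom = denom * (i - j) % P
        scale = v * pow(denom, -1, P) % P  # modular inverse (Python 3.8+)
        for k, c in enumerate(basis):
            result[k] = (result[k] + scale * c) % P
    return result


def set_as_polynomial(values):
    """Encode a set as the polynomial whose roots are exactly its elements."""
    poly = [1]
    for v in set(values):
        poly = poly_mul(poly, [(-v) % P, 1])  # multiply by (X - v)
    return poly


balances = [1200, 50, 3000]
column_poly = column_as_polynomial(balances)
membership_poly = set_as_polynomial(balances)
```

With these encodings, reading row `i` becomes evaluating `column_poly` at `i`, and set membership becomes checking that `membership_poly` evaluates to zero at the candidate value.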

To understand why the "Circuit" model causes such a headache, you have to look at the code. Imagine a simple requirement: Prove a user’s balance is over 1,000 without revealing the actual number.

In a circuit-based world, you aren't writing an "if" statement. You are building a mathematical proof from scratch. Because most ZK circuits don't inherently understand concepts like "greater than," you have to manually decompose numbers into bits just to compare them.

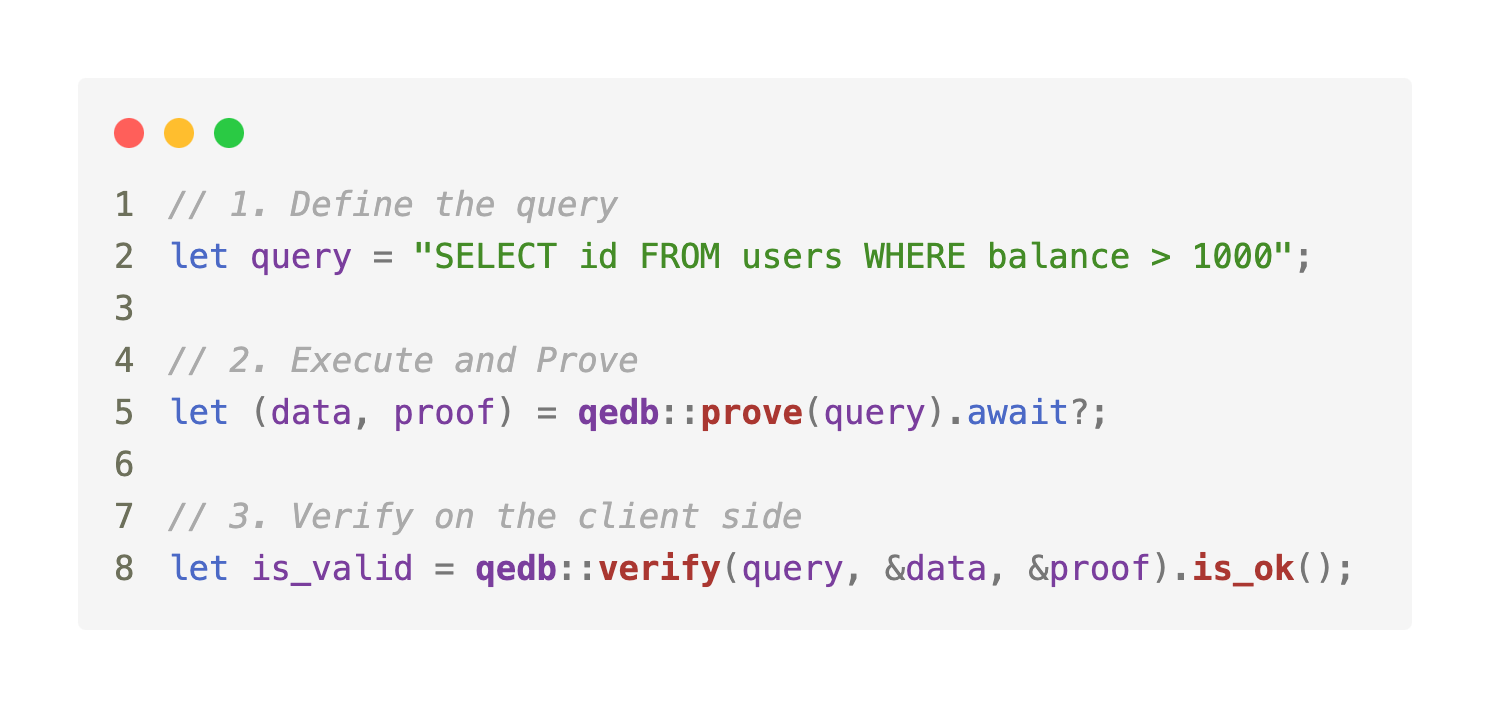
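The bit-decomposition trick can be sketched in plain Python. This is not a real circuit and not any particular ZK framework's syntax; it only mimics the constraints a circuit would have to enforce to show `balance > threshold`. The field modulus `P`, the bit width `N_BITS`, and the function names are assumptions made for the example.

```python
# Illustrative only: mimics circuit constraints in plain Python.
# A real circuit would keep `balance` private and express these checks as gates.
P = 2**61 - 1   # toy field modulus (assumption)
N_BITS = 32     # assumed maximum bit width of balances and thresholds


def witness_bits(balance, threshold):
    """Prover side: bits of (balance - threshold - 1), reduced mod P."""
    diff = (balance - threshold - 1) % P
    return [(diff >> i) & 1 for i in range(N_BITS)]


def constraints_hold(balance, threshold, bits):
    """Check the constraints a comparison circuit would enforce."""
    if len(bits) != N_BITS:
        return False
    # Constraint 1: every wire is a bit, i.e. b * (b - 1) == 0.
    booleanity = all(b * (b - 1) % P == 0 for b in bits)
    # Constraint 2: the bits recompose to balance - threshold - 1 in the field.
    recomposed = sum(b << i for i, b in enumerate(bits)) % P
    return booleanity and recomposed == (balance - threshold - 1) % P


ok = constraints_hold(1500, 1000, witness_bits(1500, 1000))
not_ok = constraints_hold(900, 1000, witness_bits(900, 1000))
```

If the balance is not above the threshold, the difference wraps around to a huge field element that cannot fit in `N_BITS` bits, so no valid bit assignment exists. That is a lot of machinery for what an ordinary program writes as `balance > 1000`.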

With QEDB, the "circuit" is the database engine itself. It already knows how to handle math, strings, and complex logic. You don't build the proof; you just describe the data you want.

In the QEDB model, the complexity is abstracted away. The database understands the relational model, so it knows how to prove filters, joins, and aggregations out of the box. If you need to change your query tomorrow, you just change the string.

Using a manual circuit means building a specific, unchangeable tool for every individual task. Using QEDB means using a flexible engine that can prove any supported query you throw at it.

Remark. A universal tool that automatically stitches together Zero-Knowledge (ZK) circuits for any database operation sounds appealing, but proving complex queries with technologies like zk-SNARKs or zk-STARKs tells a different story. You could theoretically build a generic, modular circuit system that handles elementary DB operations and concatenate those circuits to verify a query. However, this approach is far less efficient, and therefore far more expensive in computational resources, than a custom, dedicated (ad hoc) circuit tailored to the exact end-to-end query you need to prove.

If you’ve spent any time in the ZK space, you know the reputation: it’s computationally expensive and notoriously slow. The rigidity of the circuit model doesn't just hurt the developer; it hurts the user. When you treat a database query like a generic math circuit, the system has to "simulate" every possible branch of logic, leading to a massive performance tax. As your dataset grows from 100 rows to 1,000,000, a general-purpose circuit starts to crawl.

QEDB takes a different approach. As detailed in our research paper "qedb: Expressive and Modular Verifiable Databases (without SNARKs)", the breakthrough lies in moving away from SNARK-based architectures entirely. Instead of a monolithic, hardcoded circuit, QEDB uses a modular framework that separates the logic of query verification from the underlying cryptography.

This architecture eliminates the "circuit tax" bottleneck by using specialized primitives like KZG polynomial commitments and Linear-Map Vector Commitments (LVCs) that fit well with tabular data. Because the engine isn't restricted by the rigid boundaries of a circuit, it can handle complex SQL queries with ease.

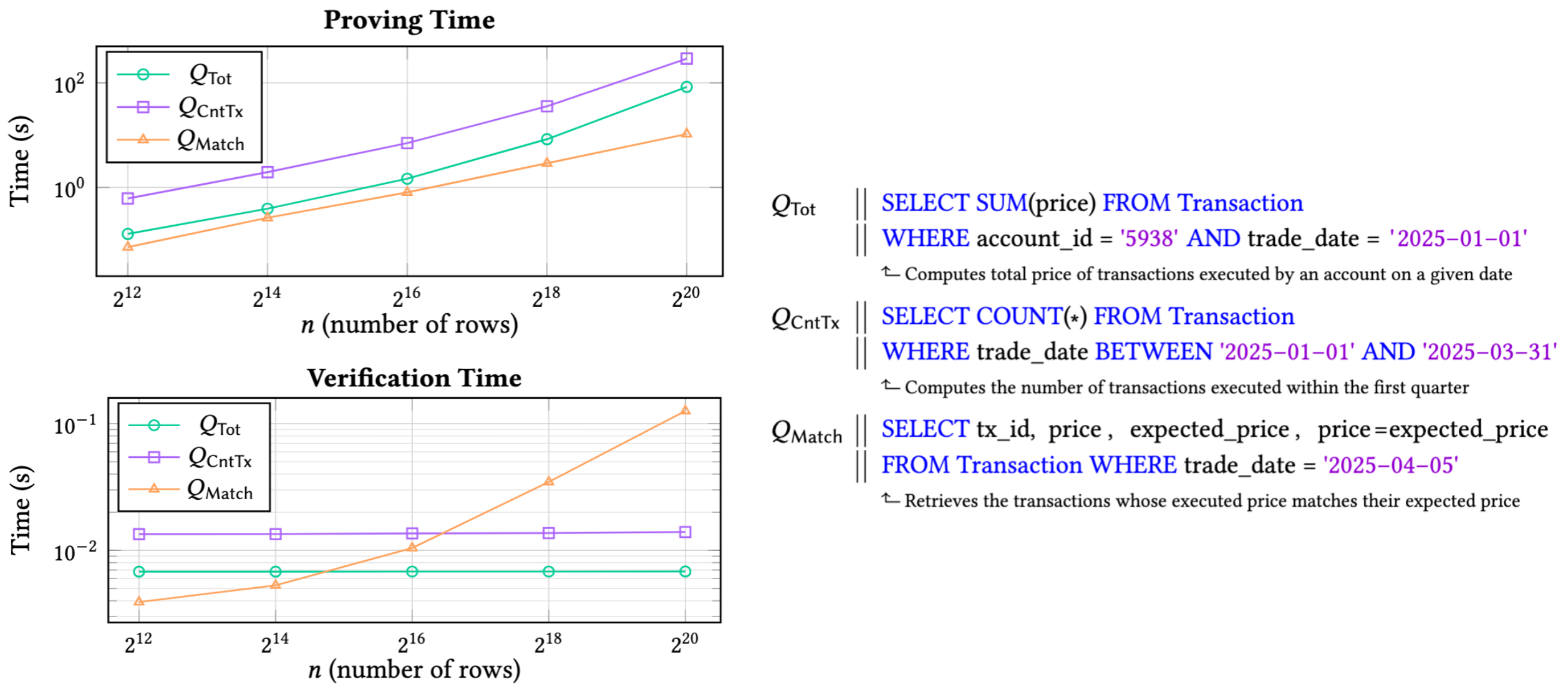
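For readers unfamiliar with KZG, the algebraic fact behind its openings is simple: `p(z) = y` exactly when `p(X) - y` is divisible by `(X - z)`. The sketch below checks that identity in plain Python over a toy field. It leaves out the elliptic-curve commitments and pairings that make real KZG succinct and binding, and it does not reflect QEDB's implementation. The modulus `P` and the function names are assumptions for illustration.

```python
# Illustrative only: the divisibility identity behind KZG openings,
# checked in the clear. Real KZG performs this check on committed values
# at a secret point using pairings.
P = 2**61 - 1  # toy field modulus (assumption)


def poly_eval(coeffs, x):
    """Evaluate a polynomial (lowest degree first) at x mod P."""
    acc = 0
    for c in reversed(coeffs):
        acc = (acc * x + c) % P
    return acc


def open_at(coeffs, z):
    """Return (y, quotient) with p(X) - y == quotient(X) * (X - z)."""
    quotient = [0] * (len(coeffs) - 1)
    carry = 0
    # Synthetic division by (X - z), from the highest coefficient down.
    for k in range(len(coeffs) - 1, 0, -1):
        carry = (coeffs[k] + carry * z) % P
        quotient[k - 1] = carry
    y = (coeffs[0] + carry * z) % P  # the remainder equals p(z)
    return y, quotient


def check_opening(coeffs, z, y, quotient, r):
    """Check p(r) - y == quotient(r) * (r - z) at a test point r."""
    lhs = (poly_eval(coeffs, r) - y) % P
    rhs = poly_eval(quotient, r) * (r - z) % P
    return lhs == rhs


p = [5, 0, 3, 2]            # 2x^3 + 3x^2 + 5
y, q = open_at(p, 7)        # claimed value of p at z = 7
valid = check_opening(p, 7, y, q, r=12345)
forged = check_opening(p, 7, (y + 1) % P, q, r=12345)
```

In an actual KZG scheme the verifier never sees `p`; it holds a short commitment and a single group element as the proof, which is what keeps proofs small regardless of column length.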

The result is linear scaling, where the time required to prove a query doesn't skyrocket as your database grows. You can verify millions of rows while keeping proof sizes small (and constant) and verification lightning-fast. Because the verifiability is baked into the database primitives rather than a monolithic SNARK circuit, you get constant-time verification where the "truth" of the data can be confirmed in milliseconds.

To see what this means in practice, consider these example queries and their corresponding performance metrics on a standard financial dataset.

The real power of this modularity is visible in how the system scales. As shown in the performance charts above, the time required to prove and verify queries like Qtot (sums), QCntTx, and QMatch remains remarkably efficient even as the table grows past a million rows.

The landscape of verifiable data is shifting. For a long time, the only way to achieve trust was to lock logic into a rigid, mathematical box. But software is living; it needs to breathe, pivot, and scale without a complete cryptographic overhaul every time a requirement changes.