We are entering a golden age of AI agents, where they bring tremendous convenience to many aspects of our workflows and lives.

We want to see agents advising us and acting on our behalf using private data, for instance by calculating our taxes, managing our budgets, or helping us create data-rich presentations.

Run a business and need more sales or help desk staff quickly? AI agents can handle tasks autonomously so end users don't wait 20 minutes on that help desk call. AI is perfect for all those places where we don't have enough people when we need them!

But for both co-pilot and automation scenarios to go live, the critical questions are:

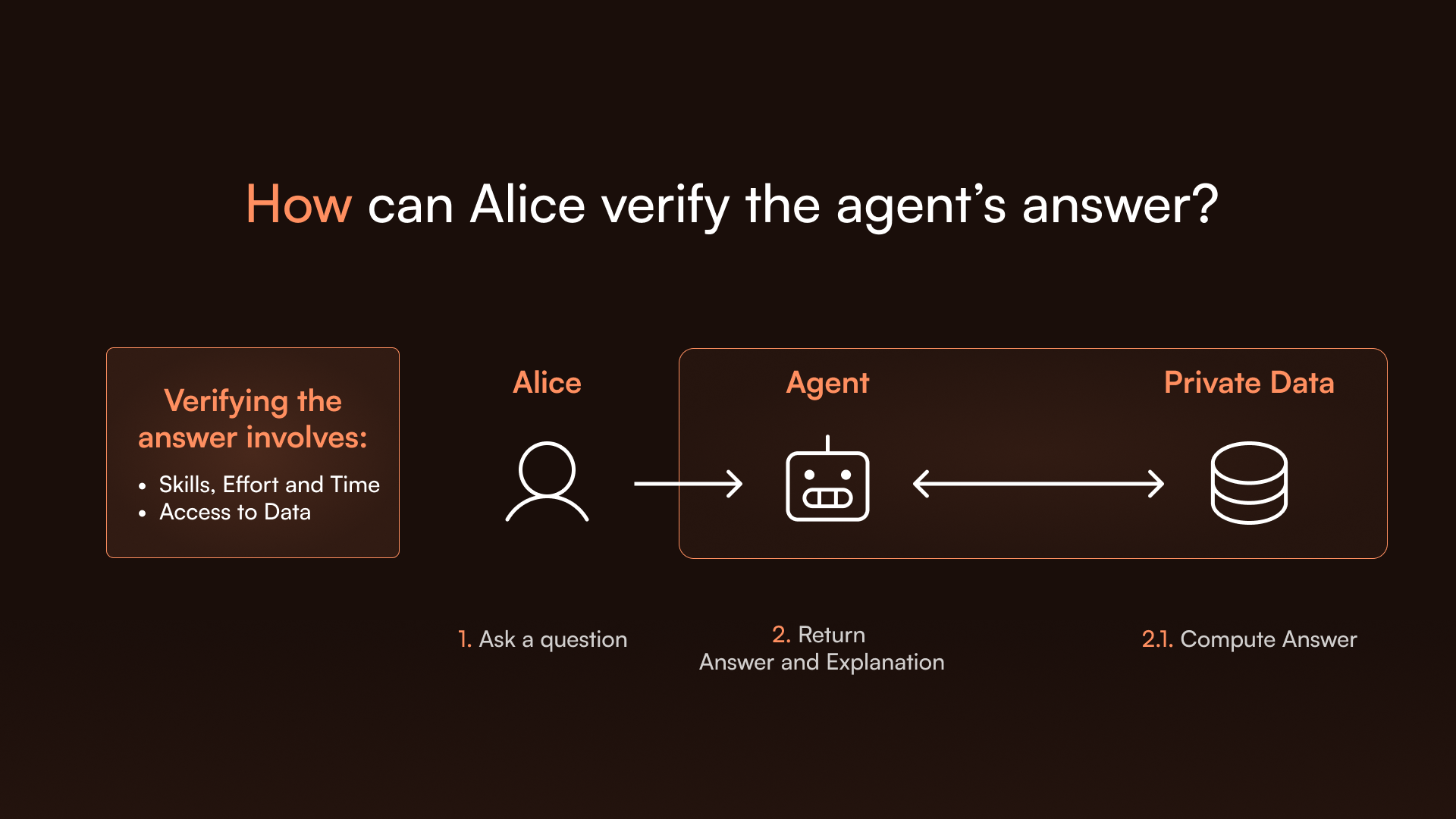

Users want to rely on AI answers with confidence, free from the worry of potential mistakes. They also do not want to spend time reading explanations and verifying the accuracy of these answers, which creates unnecessary stress and anxiety.

One of the key reasons for not trusting AI is hallucinations. They occur when large language models (LLMs) produce false, misleading, or entirely fabricated information that appears plausible but lacks any basis in reality. Hallucinations arise from the model's inherent limitations in distinguishing between factual and non-factual information, especially when it relies solely on patterns learned from training data rather than on verified external knowledge.

Today, the main way to reduce hallucinations to a manageable level is grounding. Grounding in LLMs refers to their ability to generate responses that are accurate, relevant, and aligned with real-world facts or context. It ensures that the model’s output is not only coherent but also factual and contextually appropriate, and it is often achieved by integrating external data sources or databases during response generation.

While grounding is an excellent technique for combating hallucinations, end users are still left to trust blindly that the methods developers use produce factually correct results. For end users to truly trust AI systems, those systems must not only use grounding or similar techniques to reduce hallucinations, but also provide proof that the generated text is factually correct, even when the user does not have direct access to the context used to generate it.

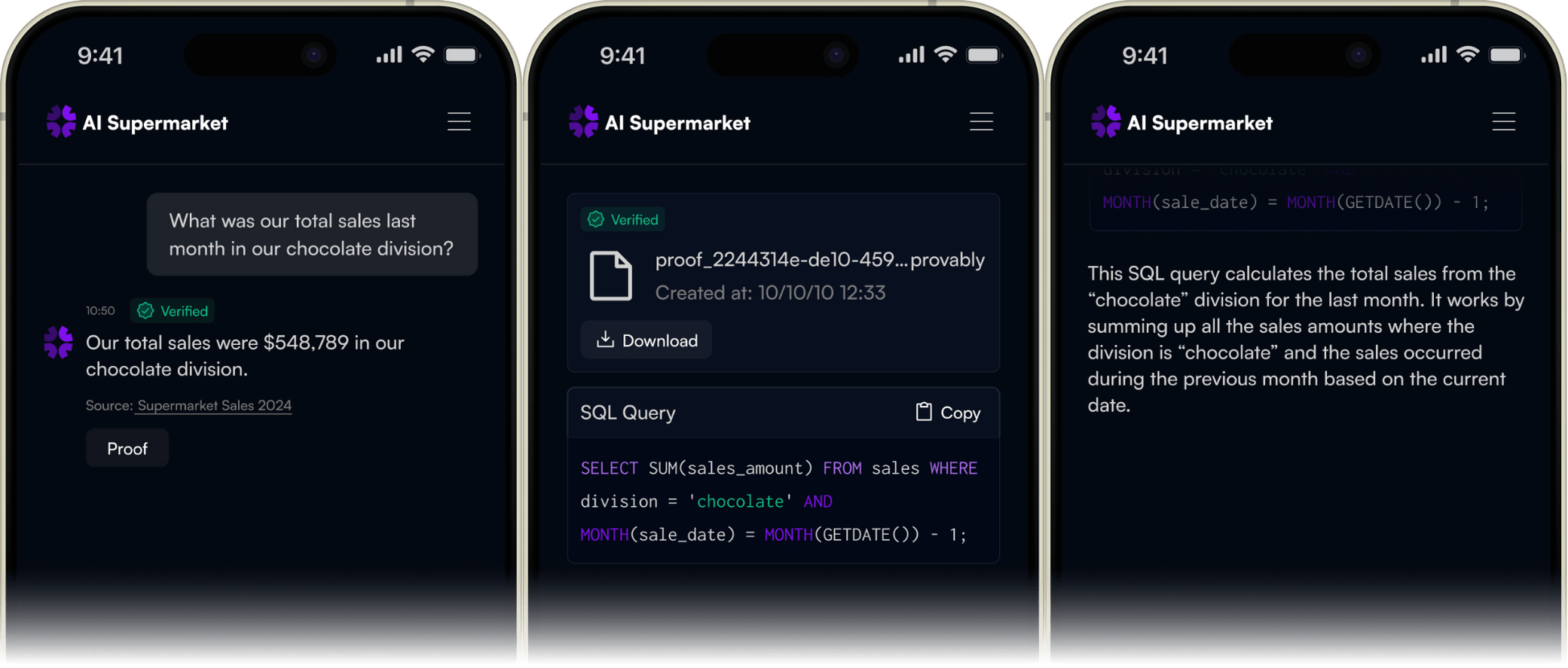

To solve these problems, we introduce a technique called Proving, which ensures that AI answers on private data are correct, every time, with results backed by cryptographic proofs. Provably has developed zero-knowledge (ZK) protocols for SQL computations on databases and tabular structures, allowing agents to prove the correctness of their computations and enabling others to independently verify the results in less than 2 seconds.

The beauty of this approach is that recipients of AI answers—whether users, agents, or applications—don’t need to understand the systems behind the AI. They can verify correctness without concerning themselves with the specific technologies used, saving time and reducing complexity. For deterministic tasks like database queries, the end user only needs to focus on integrating the answers into their workflow without worrying about the truthfulness of the results.

Each answer provided by Provably includes a ZK proof along with metadata such as the SQL query, data source, result, and timestamp, all explained in plain English. Users can revise their prompts and re-query as needed. ZK proofs can be stored immutably, explained, repeated, and verified later to resolve disputes or address legal issues.
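As a rough illustration of the metadata listed above, the sketch below models an answer record in Python. Every name here (`ProvenAnswer`, `describe`, the field names, and the sample values) is hypothetical and chosen for illustration only; it is not Provably's actual API or data format, and the proof is represented as opaque placeholder bytes.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)  # frozen: a stored answer record should not be mutated
class ProvenAnswer:
    """Hypothetical container for an answer and its accompanying metadata."""
    sql_query: str       # the SQL query that was executed
    data_source: str     # identifier of the database that was queried
    result: object       # the query result returned to the user
    timestamp: datetime  # when the query was executed
    proof: bytes         # opaque ZK proof; its real format is not described here


def describe(answer: ProvenAnswer) -> str:
    """Render the metadata as a plain-English sentence."""
    return (
        f"On {answer.timestamp:%Y-%m-%d %H:%M} UTC, the query "
        f"'{answer.sql_query}' was run against {answer.data_source} "
        f"and returned {answer.result}."
    )


# Sample values are invented for the example.
example = ProvenAnswer(
    sql_query="SELECT SUM(amount) FROM invoices",
    data_source="billing_db",
    result=1250,
    timestamp=datetime(2025, 1, 15, 9, 30, tzinfo=timezone.utc),
    proof=b"<placeholder proof bytes>",
)

print(describe(example))
```

Keeping the record immutable mirrors the idea that a stored proof and its metadata can be revisited later, for example when resolving a dispute.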

This approach enables AI agents to handle straightforward sales tasks, such as generating a bill for a shopping basket, as well as complex processes like loan origination, where the agent can conduct credit and risk analysis to help the end user and financial provider select the most suitable loan product.

On the privacy front, agents can make claims without revealing sensitive data. ZK identity allows agents to act securely on behalf of humans, enabling them to perform tasks involving ad hoc database computations while preserving user privacy.

Rather than interacting with data directly, LLMs will increasingly generate precise and accurate code to communicate with databases. This capability will only improve over time.

Provably is a ZK middleware tool that connects to database management systems (DBMSs) like PostgreSQL. The ZK protocols ensure that the DBMS executes the query correctly and that the query answer contains all of the correct data and nothing else. Our research focuses on generating proofs for large datasets efficiently.
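The guarantee described above has two parts: the answer contains every row the query should return, and it contains no row the query should not return. The sketch below illustrates that property by naive re-execution, which is not a ZK protocol and is not how Provably works: it needs full access to the data, which is exactly what a ZK proof lets the verifier avoid. The function `check_answer`, the `orders` table, and the sample rows are hypothetical, and Python's built-in `sqlite3` stands in for PostgreSQL so the example runs without a server.

```python
import sqlite3
from collections import Counter


def check_answer(conn, sql, claimed_rows):
    """Compare a claimed answer with a trusted re-execution of the same query.

    Returns (all_correct, only_correct):
      all_correct  -- no row that the query returns is missing from the claim
      only_correct -- the claim contains no row that the query does not return
    """
    expected = Counter(conn.execute(sql).fetchall())
    claimed = Counter(tuple(row) for row in claimed_rows)
    all_correct = not (expected - claimed)   # empty difference: nothing missing
    only_correct = not (claimed - expected)  # empty difference: nothing extra
    return all_correct, only_correct


# In-memory stand-in database with invented sample data.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER, amount INTEGER)")
conn.executemany("INSERT INTO orders VALUES (?, ?)", [(1, 10), (2, 25), (3, 40)])

sql = "SELECT id FROM orders WHERE amount > 20"

print(check_answer(conn, sql, [(2,), (3,)]))        # complete and exact answer
print(check_answer(conn, sql, [(2,)]))              # a matching row is missing
print(check_answer(conn, sql, [(1,), (2,), (3,)]))  # a non-matching row was added
```

Multisets (`Counter`) are used instead of sets so that duplicate rows, which SQL results may legitimately contain, are counted correctly.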

Additionally, Provably includes a platform to manage multiple middleware instances, create AI-friendly private data catalogs, and govern secure access through a robust permission management system.

Proving is a powerful tool, and its middleware approach makes it accessible to all AI teams operating in any environment. This setup allows any agent to interact with databases via Provably for truthful, verifiable results. Currently, Provably supports PostgreSQL, and we are actively working to expand compatibility to other databases. We also provide integration for custom tabular structures, such as application-specific data stores; contact us for tailored solutions.

Provably V1 currently supports aggregate SQL queries on databases with up to 1 million rows. To meet the growing demands of AI workloads, we are developing Provably V2. This new version will feature faster proving and verification, support for general SQL queries, and the ability to handle larger datasets.

High-speed, truthful answers are set to transform AI, and we’re thrilled to lead this charge. ZK technologies empower us to build “trustworthy systems” by enabling precise proof and verification of facts. For AI to deliver accurate results, data must be correct, questions understood, and computations flawless. The biggest opportunity in our eyes is to resolve users' doubt about AI’s ability to perform accurate computations. By focusing on this key challenge, we offer developers an immediate solution that integrates seamlessly without complex migrations or overhauls.